3. Proofs¶

3.1. Introduction¶

The preceding section of these notes introduced the language of predicate and constructive logic, but it left hanging the question of how to:

- construct proofs that you need

- extract information from proofs you already have

This section of the book answers these questions by teaching you how to construct and use proofs.

3.1.1. How to Design Proofs¶

In constructive logic, propositions are types and proofs are programs. To design a proof is thus akin to designing a program. Each such program is nothing but an application of a proof-constructing function, a.k.a. an inference rule, to arguments of the right types. Often, these arguments will include other proofs. The problem of designing a proof thus breaks into two sub-tasks: selecting an inference rule, and constructing the arguments to which it is applied.

3.1.1.1. The Problem to be Solved¶

Suppose you want to construct a proof, p, of some proposition, P. As you recall, P, a proposition, is also a type in constructive logic, so you can view your task as nothing other than constructing a term of type P. Such a term will always be in a specific form. It will always be a function application term, f a b c …, where f is a proof-building function (an inference rule); where a, b, c, etc., are values of the types f requires as its arguments; and where f when applied to such values returns a value, a proof, of the right type, namely a proof of P.

3.1.1.2. Step 0: Understand What Type of Proof is Required¶

3.1.1.3. Step 1: Select an Inference Rule¶

The first step is to select an inference rule, a proof-building function, to use to construct the proof you seek. You are highly constrained in your choice, because you must select an inference rule that constructs a proof of the required type. For example, if you require a proof of a proposition, a = b, then you have no choice but to select an inference rule that returns a value of this type: a proof of this proposition.

3.1.1.4. Step 2: Apply It to Arguments of the Right Types¶

Having selected an inference rule to use to construct a proof of a given proposition (your goal), the second step is to apply that rule, as a function, to arguments of the right types. Often the arguments that are required are themselves proofs of other propositions. Having selected a rule to apply, the problem is thus reduced to zero or more sub-problems: to construct arguments of the right types, to which the selected inference rule can be applied to construct the final proof value.

3.1.1.5. Method: Top-Down, Type-Guided Refinement with Backtracking¶

The challenge, of course, is that it’s not always easy to come up with values of all the argument types required to apply an inference rule. This is true especially when an inference rule takes as arguments other proofs that you do not already have on hand. In this case, developing these smaller proofs, or lemmas, becomes the main challenge.

The crucial idea at this point is that you can give a complete proof, in the form of an application of the top-level inference rule, but leave holes in it where these arguments are required. Lean will then present them to you as sub-goals. And, now for the trick: You solve them in the same manner, by the recursive application of the same top-down, type-guided refinement technique.

If you get completely stuck, then you have discovered that perhaps you went down the wrong path, and you have to backtrack and try something else. So the overall approach is top-down, type-guided refinement with backtracking. That is how to design proofs.
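The refinement method described above can be sketched in Lean 3 syntax. This is only an illustration: the goal proposition, 0 = 0 ∧ 1 = 1, is chosen here for the example, and it uses the conjunction introduction rule, and.intro, ahead of its detailed treatment.

```lean
-- Step 1: the goal is a conjunction, so select and.intro.
-- Step 2: leave holes (underscores) for its two proof arguments.
-- Lean reports an error for this incomplete term, showing each hole
-- as a remaining sub-goal: 0 = 0 and 1 = 1.
example : 0 = 0 ∧ 1 = 1 := and.intro _ _

-- Refinement: fill each hole by the same method, here using eq.refl.
example : 0 = 0 ∧ 1 = 1 := and.intro (eq.refl 0) (eq.refl 1)

-- The same development written as a tactic script.
example : 0 = 0 ∧ 1 = 1 :=
begin
  apply and.intro,      -- splits the goal into two sub-goals
  { exact eq.refl 0 },  -- solves 0 = 0
  { exact eq.refl 1 }   -- solves 1 = 1
end
```

The first example is deliberately incomplete; it is there only to show how Lean presents holes as sub-goals.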

3.1.1.6. Discussion¶

To start with, we have only the basic inference rules of natural deduction. These are the assembly language of proof construction. As we go along and prove richer and richer theorems, the proofs of those theorems, checked for logical validity by Lean, become higher-level inference rules that you can use later on to avoid re-doing a lot of low-level work.
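As a small sketch of how a proved theorem becomes a reusable, higher-level rule, consider the following Lean 3 fragment. The name my_and_swap is hypothetical, introduced only for this illustration, and the example again borrows the conjunction rules that are covered later.

```lean
-- A theorem proved once from the basic rules for conjunction ...
theorem my_and_swap {P Q : Prop} (h : P ∧ Q) : Q ∧ P :=
  and.intro (and.elim_right h) (and.elim_left h)

-- ... can then be applied like any other inference rule.
example : 1 = 1 ∧ 0 = 0 :=
  my_and_swap (and.intro (eq.refl 0) (eq.refl 1))
```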

Learning the set of basic inference rules and how you can compose them into larger proof-building functions to prove more interesting propositions is the essential aim of this chapter. As we go, we will develop a library of richer rules/functions/proofs. You will want to remember what proof-building rules we have at hand, including both the basic rules of natural deduction and the higher-level rules that we implement ourselves. Understanding these concepts is the main intellectual challenge in this section of the course.

To succeed, you must know the basic inference rules of natural deduction. You must be fluent enough to recall them and to use them to implement new proof-building functions that prove more complex and interesting propositions.

3.1.1.7. Introduction and Elimination Rules¶

The rules for using and constructing proofs, in ways that are logically valid, are known as elimination and introduction rules, respectively. The introduction and elimination rules together are known as the inference rules of a logic. Introduction rules are used to construct proofs of a particular form (using a particular connective or quantifier), while elimination rules are used to de-construct proofs of a particular form to obtain information contained within them. The inference rules that we will meet in this section of the notes are the fundamental rules for logically valid reasoning in the system of reasoning generally known as natural deduction. Natural deduction was invented long before automated proof assistants such as Lean were built. It is the framework on which such automated reasoning tools are based.

3.1.2. Structure of Each Following Chapter¶

The central idea on which this section of the course is organized is that each syntactic form of proposition has its own corresponding introduction and elimination rules. This section thus has one chapter for each form of proposition. Each of the following chapters is organized in the same way. It first reviews the form of proposition that is the subject of the chapter, and its intended meaning. The heart of each chapter presents the corresponding introduction and elimination rules and how these rules enforce the intended meanings of the connective or quantifier being considered. Each inference rule is presented in a textual form, using what we will just call inference rule notation. Then at the core of each chapter is a presentation of each inference rule as a function in Lean. We introduce additional related concepts in the context of these chapters. Each chapter wraps up with an explanation of how one would write informal proofs using the given inference rules.

Whether one is using Lean or not, these are the principles of logical reasoning by which one constructs and uses proofs of propositions. To learn proofs requires fluency with these rules. The aim of this section of the course is to develop fluency, not just a superficial understanding of, but the ability to apply, these rules.

3.1.3. Inference Rules are Functions in Constructive Logic¶

In constructive logic, inference rules are formalized as functions. They are defined in just the right ways to enforce the intended interpretations of the corresponding forms of propositions. Introduction rules (functions), for constructing proofs, generally take arguments of certain types, often proofs of other “smaller” propositions, enforcing the constraint that certain things must be true (and that there are proofs of them) before proofs of “bigger” propositions can be formed. Similarly, elimination rules take proofs as arguments and can only extract and return certain values from them. The Lean type checker is the enforcer of logical consistency!

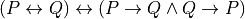

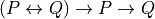

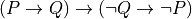

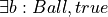

For example, the introduction rule (function) for constructing a proof of P ∧ Q requires that proofs of P and of Q be given as arguments. The type checker will reject proof values that are not of the required types, e.g., P and Q. The upshot is that it’s not possible to construct a proof of P ∧ Q in Lean unless one already has individual proofs of P and of Q. A proof of a conjunction thus guarantees that proofs exist for each conjunct, enforcing the meaning of the and connective.
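A minimal Lean 3 sketch of this point about conjunction introduction follows. The particular propositions are chosen here only for illustration; and.intro is the library's introduction rule for conjunctions.

```lean
-- and.intro needs a proof of each conjunct, in order.
example : 0 = 0 ∧ tt = tt := and.intro (eq.refl 0) (eq.refl tt)

-- If uncommented, the next line is rejected by the type checker,
-- because the arguments are proofs of the wrong propositions
-- (they are given in the wrong order).
-- example : 0 = 0 ∧ tt = tt := and.intro (eq.refl tt) (eq.refl 0)
```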

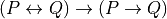

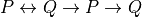

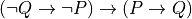

Similarly, from a given proof of P ∧ Q, one can apply elimination rules (functions) to obtain individual proofs of P and Q. These are the return types of the two elimination rules for conjunctions. In general it is not possible to derive a proof of some other proposition, say R, from no more than a proof of P ∧ Q, as there is no rule available for doing this.
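The two elimination rules for conjunctions can be sketched in Lean 3 as follows. Here P, Q, and pq are names assumed only for this example; and.elim_left and and.elim_right are the library's names for the rules.

```lean
-- From a proof of a conjunction, extract a proof of the left conjunct ...
example (P Q : Prop) (pq : P ∧ Q) : P := and.elim_left pq

-- ... or a proof of the right conjunct.
example (P Q : Prop) (pq : P ∧ Q) : Q := and.elim_right pq
```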

In traditional paper-and-pencil discrete math courses, there is often a strong emphasis on learning what are called proof strategies. For example, proof by negation is a strategy for proving negations: propositions of the form not P. It works by assuming that P is true, showing that that leads to a contradiction, and then concluding from that that P must be false (i.e., that not P is true). We will discuss these so-called proof strategies in this chapter. What you will see, however, is that what they really amount to is nothing other than the application of certain inference rules, often with the result of transforming a current proof goal (a proposition to be proved) into a different form, in a valid way, taking you one step closer to having a complete proof.
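As a hedged illustration of a proof strategy seen as the application of inference rules, here is a small proof by negation in Lean 3. The propositions P and Q and the hypotheses pq and nq are assumptions made up for this sketch. In Lean, ¬ P is defined to mean P → false, so proving ¬ P amounts to writing a function that turns any assumed proof of P into a proof of false.

```lean
example (P Q : Prop) (pq : P → Q) (nq : ¬ Q) : ¬ P :=
  assume (p : P),   -- assume that P is true
    nq (pq p)       -- pq p proves Q, which contradicts nq
```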

3.1.4. Aims of this Section of the Course¶

In a nutshell, then, for each of the syntactic forms of proposition, there is an associated set of proof construction (introduction) and information extraction (elimination) rules. Proof strategies are just inference rules. The overarching objective of this section of the course is to have you deeply understand, remember, and be able to apply all of these rules. You will often need to apply combinations of rules/functions, to construct proofs. You will come to feel that constructing proofs is very much like (and it is!) constructing programs: ones that take arguments of certain kinds, including proofs, and that return values of other kinds, including proofs. Whether you are doing informal proofs on paper and pencil or using Lean or another proof assistant, you will use these rules of reasoning. All of mathematics, logic, and computer science depend on them. You should make mastery of these rules your learning goal for this section of the course.

Inference rules are functions, and proofs are obtained by applying such functions to the right kinds of arguments. Constructing proofs of propositions turns out to be a programming problem! One must produce a program that constructs a proof of the proposition to be proved. A proof is a value of a logical type, namely the proposition being proved, which is the return type of the function.

The remainder of this chapter starts with proofs of equality propositions, then moves on to universal generalizations; false and true; conjunctions; implications; bi-implications (equivalences); disjunctions; negations; and finally existentially quantified propositions.

3.2. Equality¶

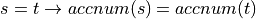

In mathematical and logical reasoning, we often need to express the proposition that two terms are equal, or not. For example, in specifying how an online system should work, we might want to state that if s and t are terms that represent the same Person, then the account numbers associated with s and t must be the same. We could write this in logic with an expression something like this: s = t → acct s = acct t, where acct (a name used here only for illustration) maps a person to an account number. In this chapter, we present the concept of equality precisely as it is formalized in typed predicate and constructive logic.

There are two basic issues to understand. The first is how to use what Lean calls the eq predicate, which takes two (explicit) arguments, such as s and t, to form propositions of the form, s = t. The second issue is to understand the inference rules available to construct and use proofs of such propositions.

3.2.1. Formula¶

In a typed predicate logic, equality is a predicate. In Lean, the predicate is called eq. This predicate takes three arguments: a type, T, and two terms, s and t, of type T (for whatever type might have been given for the argument T). Lean infers the value of T from the arguments s and t, so T is never given as an explicit argument. To form a proposition using eq, one thus just applies it to two arguments, which must be of the same type.

Here’s an example. We assign to anEqualityProposition the proposition, 1 = 1. In this case, Lean infers that the value of the type argument, T, is nat. The #check command confirms that anEqualityProposition is a proposition. On the other hand, trying to form the proposition that 1 = tt doesn’t work at all, because the two terms are not of the same type.

def anEqualityProposition := eq 1 1

#check anEqualityProposition

def noCanDo := eq 1 tt -- type error: 1 and tt are not of the same type
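The implicit type argument described above can be made visible. In Lean 3, prefixing a name with @ makes its implicit arguments explicit, so the following sketch passes T by hand; the particular values are chosen only for illustration.

```lean
#check @eq nat 1 1      -- the same proposition as eq 1 1, with T given as nat
#check @eq bool tt ff   -- here T is bool
```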

For each and every type, T, the eq predicate can thus be used to form propositions of the form eq s t, which can also be written as s = t. The expression s = t is defined to be a notation that means exactly eq s t. Here’s a formal statement of this proposition, along with a proof of it, constructed incrementally using a Lean tactic script, starting and ending with the keywords, begin and end, respectively. We will cover proof scripts in increasing depth and detail in this section of these notes.

example: ∀ T: Type, ∀ a b: T, (eq a b) = (a = b) :=

begin

intros,

exact rfl

end

Many functions and predicates defined in Lean’s libraries, which are automatically imported when we use Lean, have convenient notations. For example, the term 1 + 2 is just a nice notation for nat.add 1 2, where nat.add is the name of the function defined in Lean’s libraries for computing sums of natural numbers.
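A short Lean 3 check of the claim about notation: the example below should be accepted because the two sides reduce to the same value.

```lean
#check nat.add 1 2                     -- a term of type ℕ
example : 1 + 2 = nat.add 1 2 := rfl   -- both sides reduce to the same value
```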

Here are a few examples where we form equality propositions using the = notation rather than eq. These examples also show that we can form equality propositions for objects of arbitrary types, as long as the objects on the two sides of the = sign are of the same type. (A kind of exception is that if Lean knows of a way to convert a value of one type into a value of another type, then you can write equalities involving both types and Lean will do the coercion for you.) The last line of this code block is an example where Lean issues a type error, which expresses that Lean found no way to convert the Boolean value, tt, into a value of type nat.

#check eq 0 0 -- a proposition

#check 0 = 0 -- same, nicer notation

#check 1 = 1 -- another proposition

#check 0 = 1 -- a third proposition

#check tt = ff -- involving booleans

#check "Hi" = "H" ++ "i" -- strings

#check 1 = tt -- type error: tt cannot be converted to a nat
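The coercion exception mentioned above can be illustrated with natural numbers and integers, for which Lean 3's core library provides a coercion. This is only a sketch; the variable names n and z are assumptions made for the example.

```lean
variables (n : ℕ) (z : ℤ)

-- The two sides have different types, but Lean knows how to convert
-- a natural number into an integer, so it inserts a coercion on n.
#check z = n
```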

3.2.2. Interpretation¶

What we want a proposition, s = t, to mean is that the two terms reduce to the same value. In the second-to-last example above, for instance, we want it to be the case that "Hi" is treated as being equal to (the string denoted by the function application expression) "H" ++ "i", because they mean the same string.

Whether values are written directly or indirectly by the application of functions to arguments, if they are the same value, then we want it to be true, which means that we want to be able to construct a proof, that s = t. In any other case, where s and t do not represent exactly the same value, we want it to be impossible to construct such a proof. It is the provisioning of specific introduction (proof construction) and elimination (proof utilization) rules that enforces this, our intended, interpretation of equality propositions. We now turn to these inference rules, starting with the single introduction rule, which you can now think of as a function for producing proofs of equality propositions.

3.2.3. Introduction¶

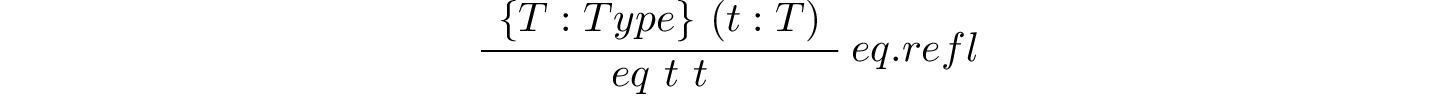

We previously mentioned that introduction rules are used to introduce or construct proofs of a particular type. The natural deduction reasoning system provides us with one such inference rule for constructing proofs of equalities: eq.refl. The eq.refl rule is used to construct or introduce a proof that two objects are equal.

Let’s unpack the name of this rule/function. This name has two parts, eq and refl. In this context, eq is a namespace associated with the eq predicate, and refl is the name of the proof-building function, defined within this namespace. To use the function from outside the namespace, one must either open the eq namespace, which we will not do, or use the qualified name, eq.refl.

3.2.3.1. Proving t = t¶

This inference rule, a function, takes two arguments: a type, T, and a single value, t, of type, T. It then constructs and returns a proof of t = t. In natural deduction, and so in Lean, this is the only way to construct proofs of an equality. Here’s an example in which we assert that the proposition, 1 = 1, is true, by asserting that we can bind a proof to an identifier, one_eq_one, of this type (remember, propositions are types), and then showing that we can do this by using eq.refl to construct a proof of (a value of type) 1 = 1. The #check command confirms that one_eq_one really is now bound to a value (namely eq.refl 1) of type (a proof of) 1 = 1.

def one_eq_one : 1 = 1 := (eq.refl 1)

#check one_eq_one

EXERCISE: Explain why you could never use eq.refl to construct a proof that two different values are equal.

3.2.3.2. Proving s = t (different terms for same value)¶

So, how does this work when applied to an equality proposition with two different terms that nevertheless mean the same thing? Consider the terms, s := 2 and t := 1 + 1. The trick is to understand that if one writes s = t, then Lean evaluates s and t when type checking a proof against the proposition. So if s and t reduce to the same value, then it will be possible to prove s = t, using either eq.refl s or eq.refl t as a proof.

#check eq 2 (1 + 1)

#reduce eq 2 (1 + 1)

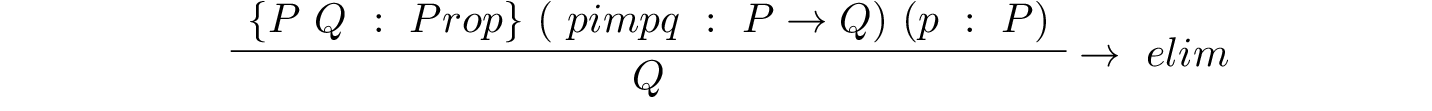

3.2.3.3. Inference rule notation¶

In logical writing, and also in such computer science fields as programming languages and software verification, inference rules, which are really just functions, are presented in a stylized form. Here is the eq.refl rule presented in this textual inference rule style. What it says is that if you apply eq.refl to a value, t, of some type, T, where the curly braces signal that type inference will be used to infer the value of T (from t), then the return value will be of type eq t t. That is, it will be a proof of t = t.
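The same information can be read off in Lean itself. As a sketch in Lean 3, asking for the type of eq.refl with its implicit arguments displayed should show a type argument in curly braces, followed by an explicit value a of that type, and a return type of a = a. Lean's library names the type α rather than T.

```lean
#check @eq.refl   -- the type of the rule, with the implicit type argument shown
#check eq.refl 5  -- an application of the rule: a proof of 5 = 5
```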

Here are a few more examples.

def z_eq_z : 0 = 0 := eq.refl 0

def z_eq_z' : 0 = 1 - 1 := eq.refl 0

def z_eq_z'' : 0 = 1 - 1 := eq.refl (1 - 1)

def ff_eq_ff : ff = band ff tt := eq.refl ff -- boolean and

def hi_eq_hi : "Hi" = "H" ++ "i" := eq.refl "Hi"

3.2.3.4. Using rfl as a shorthand¶

In many cases, Lean can infer not only T, but even the value t, from context, namely from the type of proof that is to be produced. A version of the eq.refl rule that works this way is called rfl. It’s easier to type and to read than eq.refl t, and so is preferred when it works. Here are the preceding examples using rfl.

def z_eq_z : 0 = 0 := rfl

def z_eq_z' : 0 = 1 - 1 := rfl

def z_eq_z'' : 0 = 1 - 1 := rfl

def ff_eq_ff : ff = band ff tt := rfl

def hi_eq_hi : "Hi" = "H" ++ "i" := rfl

EXERCISE: Explain precisely why eq.refl (or rfl) can never be used to derive a proof of 0 = 1.

3.2.3.5. Inference rules are functions¶

As should now be clear, inference rules in natural deduction, and thus in Lean, act like, and literally are, functions. You must provide arguments of the right types, and in return they produce values, here proofs, of desired kinds: in this case, proofs of equalities.

In many cases, inference rules will require not only types and computational values as arguments, but also proofs of previously proved or axiomatically accepted propositions. As we discussed above, for example, the introduction rule for constructing a proof of a conjunction requires proofs of the two conjuncts as arguments. Because Lean is strongly and statically type-checked, it will not permit the application of an inference rule unless valid values, including such proofs, are given for all arguments. In this way Lean enforces the consistency, the correctness, of logical arguments, formulated as proofs. So, you can now think of eq.refl in two different ways: as a logical inference rule, and as a proof-constructing function.

3.2.3.6. Top-Down, Type-Driven Refinement¶

We’ve now seen that we can prove a proposition by giving an exact proof term. Here we prove the proposition, 0 = 0, by giving an exact proof term (a value of a propositional type).

lemma zeqz : 0 = 0 := eq.refl 0

Just as function definitions in ordinary programming can be pretty complex, proof terms can be, too. They can be so complex that they are very difficult to just write out all at once. Of course this is true of ordinary functions as well.

A fundamental approach to dealing with the complexity of definitions that we need to produce is to use abstraction. Abstraction is selective emphasis on the most important details of a solution, leaving holes where certain details will be filled in later. The key is to be sure that the overall solution structure works, and that it will lead to a good solution as long as those holes can be filled in with appropriate details later. One then proceeds to use the same approach to fill in each hole.

In Lean, the type of value needed in a given context is always known. The type of an overall proof term is just the type defined by the proposition to be proven (e.g., 0 = 0). We know that there’s only one type of proof that will do here. It’s the application of the eq.refl inference rule to some value. That some value is a hole! And holes have types. In this case, the definition of eq.refl requires that the hole be filled with a value of type, nat. Lean infers that from the type of the values (the zeros) in the proposition. One can now proceed to work to achieve the sub-goal of filling that hole with a term of the right type. In this case, it’s a term of type, nat, and in particular what’s needed is the value 0.

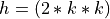

Sometimes it’s hard to write an exact proof term (here, eq.refl 0) all at once. In these cases it can help to figure out a proof term step by step, in a type-driven, top-down, structured approach. Lean supports such stepwise development of proof terms.

In Lean, this approach of using top-down, type-driven refinement to develop proofs, or even ordinary functions or other values, can be done in at least two ways. One way is to write out proof terms using underscore characters for the holes. Lean will show each one as a goal that remains to be achieved before the overall term is finished. Another, equivalent, way is to use tactic scripts to construct proofs. Let’s see that now.

First, with incomplete proof terms.

example : 0 = 0 := _

Now with a tactic script.

example : 0 = 0 :=

begin

exact eq.refl 0

end

Now open the Lean Messages panel (if it’s not already open) by typing control-shift-enter (Windows) or command-shift-enter (Mac). Now place your cursor at the start of the “exact”. The message window will display the “tactic state” at this point in the script. The state says that nothing is assumed and that the remaining goal to be proved is 0 = 0. Now move your cursor to the next line, before the end. The tactic state is empty: nothing is left to be proved, QED.

Define zeqz'' as also being of type 0 = 0, but after the :=, just write begin on the next line and then end on the following line. You need to type the begin and end lines before continuing. Put a new line between the begin and end lines, then insert apply eq.refl, (do not enter 0) and see what happens. In Lean you can “apply” inference rules with incomplete information. Sometimes Lean will complete the proof with information it can infer, but often it will change the proof state to a new state requiring you to prove additional information. This approach is useful when a single inference rule will not complete the proof.

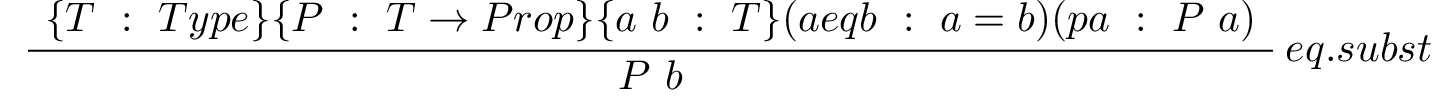

3.2.4. Elimination¶

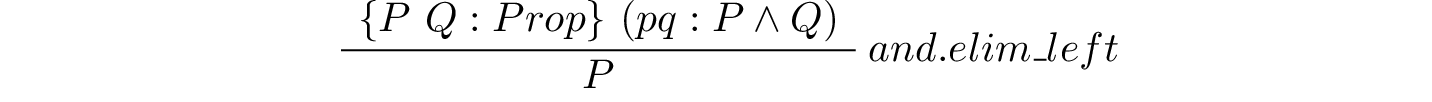

Whereas introduction rules give us ways to construct proofs that we need, elimination rules give us ways to use proofs that we already have. As we previously mentioned, elimination rules are used to extract information from proofs of a particular type, so an equality elimination rule will be used to extract information from a proof that two objects are equal.

Suppose we have a proof of some proposition involving s, and we also have a proof of s = t. To be concrete, suppose we have a proof of Kevin is from Charlottesville and we also have a proof of Kevin = Tom, maybe because Kevin uses Tom as an alias. Then we should be able to take advantage of (use) the proof that Kevin = Tom to convert the proof that Kevin is from Charlottesville into a proof that Tom is from Charlottesville.

This concept is formalized by the inference rule that in Lean is called eq.subst. Here is the rule itself, presented in inference rule style.
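The rule's type can also be inspected directly in Lean. The following is a Lean 3 sketch; the library's own argument names (for example α rather than T) may differ from the ones used in these notes. The displayed type should show four arguments in curly braces, then a proof of a = b and a proof of P a, and a return type of P b.

```lean
#check @eq.subst  -- the type of the rule, with implicit arguments shown
```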

This rule takes six arguments, the first four of which are inferred. When applying the rule one gives only the last two arguments explicitly. The first four arguments are a type, T; a predicate, P, on values of type T (P : T → Prop); and two values, a and b, of type T. The last two arguments (from which the preceding four are inferred) are, first, a proof of the proposition, a = b, and second, a proof of the proposition, P a. The latter is the proposition that a has the property represented by the predicate, P. What the rule returns is a proof of the proposition, P b, that b also has this property.

Here’s an example in which we formalize the informal argument about Kevin and Tom above.

axioms (Person : Type) (Kevin Tom : Person)

axiom kevinIsTom : Kevin = Tom

axiom isFromCville: Person → Prop

axiom kevinIsFromCville: isFromCville Kevin

example : isFromCville Tom :=

eq.subst kevinIsTom kevinIsFromCville

You can see that eq.subst is an elimination rule for eq propositions because it requires one to provide, to already have, a proof of a = b as an argument. This proof is then used, along with the additional proof of P a, to construct and return a proof of P b.

3.2.5. Properties¶

From the basic introduction and elimination rules for equality, one can deduce that the equality relation has several additional important properties. In particular, equality is reflexive, symmetric, and transitive, making it also what we call an equivalence relation.

3.2.5.1. Reflexivity¶

A relation in which everything is related to itself is said to be reflexive. Equality is reflexive in the sense that, for any value, a, of any type, T, we can obtain a proof of a = a. Every object is equal to itself. We can always construct a proof of a = a no matter what a is. To do this, we use eq.refl, or its shorthand form, rfl.
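Reflexivity can itself be stated and proved as a universally quantified proposition. This is a minimal Lean 3 sketch; the bound names T and a follow the paragraph above.

```lean
-- For any type T and any value a of type T, eq.refl a proves a = a.
example : ∀ (T : Type) (a : T), a = a :=
  λ T a, eq.refl a
```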

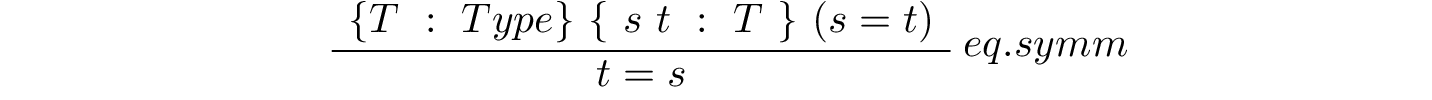

3.2.5.2. Symmetry¶

A relation in which if a is related to b then b is also related to a is said to be symmetric. Equality is symmetric in the sense that for any two objects, a and b, if a = b then b = a. What this means in Lean is that if we’re given a proof of a = b, we can apply a rule, not surprisingly called eq.symm, to obtain a proof of b = a.

Let’s see it in action in five lines of code.

def a := 1

def b := 2 - 1

lemma ab : a = b := rfl

#check ab -- a proof of a = b

#check (eq.symm ab) -- a proof of b = a!

What we see is that eq.symm is really just another function that performs this logical inference automatically for us.

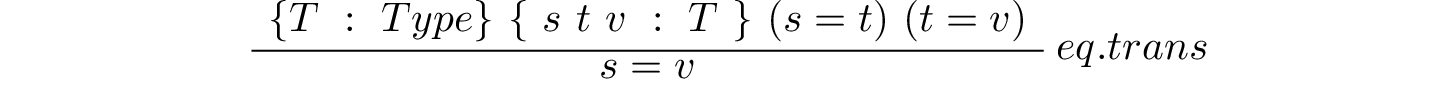

3.2.5.3. Transitivity¶

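Finally, a relation is said to be transitive if, whenever a is related to b and b is related to c, a is also related to c. Equality is transitive in the sense that if a = b, and if b = c, then a = c. In Lean, given proofs of a = b and of b = c, we can apply a rule called eq.trans to obtain a proof of a = c.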

Here’s an example in Lean.

axiom T : Type

axioms (a b c : T) (ab : a = b) (bc : b = c)

example : a = c := eq.trans ab bc
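The claim that symmetry and transitivity follow from the basic introduction and elimination rules can be sketched as follows, in Lean 3. The names my_symm and my_trans are hypothetical, chosen to avoid clashing with the library's eq.symm and eq.trans; this is one possible derivation, not necessarily the one used in Lean's libraries.

```lean
-- Symmetry: use h : a = b to rewrite the left-hand a in a = a into b.
theorem my_symm {T : Type} {a b : T} (h : a = b) : b = a :=
  eq.subst h (eq.refl a)

-- Transitivity: use bc : b = c to rewrite the b in a = b into c.
theorem my_trans {T : Type} {a b c : T} (ab : a = b) (bc : b = c) : a = c :=
  eq.subst bc ab
```

In each case Lean has to work out the predicate P for eq.subst from the goal: for my_symm it is the property of being equal to a on the left, and for my_trans it is the property of a being equal to it.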

3.2.5.4. Equivalence¶

A relation, such as eq, that is symmetric, reflexive, and transitive is said to be an equivalence relation. We will discuss equivalence relations in more detail later in this course.

For now it suffices to observe that equality is an equivalence relation on terms. Terms that reduce to the same value are equivalent under Lean’s definition of equality, even if they are not expressed in exactly the same form. So, for example, 2 is equivalent to 1 + 1, to 4 - 2 and to an infinity of other terms under the equality relation.
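A few such equalities can be checked in Lean 3. Each example below is accepted because both sides reduce to the same natural number.

```lean
example : 2 = 1 + 1 := rfl
example : 2 = 4 - 2 := rfl
example : 1 + 1 = 4 - 2 := rfl
```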

An equivalence relation divides up the world into sets of values that are all equivalent to each other. We call such sets equivalence classes. Equivalence classes are disjoint in the sense that objects in one equivalence class are never equivalent to objects in a different equivalence class.

EXERCISE: Explain why equivalence classes must be disjoint.

3.2.6. Proof Irrelevance¶

An interesting case of equivalence is at the heart of Lean. In general, there are many ways to prove a given lemma or theorem. Each proof is nevertheless of the type of the proposition that it proves, and each suffices as evidence to justify a truth judgment for the proposition. In cases where one’s aim is simply to prove a proposition, the particular proof object that is used doesn’t matter. We say that the particular proof is “irrelevant.”

In Lean, different proof terms for the same proposition type are considered to be equal. So all proofs of a given proposition are equivalent (equal) in this sense, even if they are expressed in different ways.

EXERCISE: Demonstrate that different proofs of the same proposition are equivalent with an example.

3.2.7. Formalization (Optional)¶

In Lean, you can use the #print command to see the definition of any term. Let’s see how eq is defined.

#print eq

Consider the first line: inductive eq : Π {α : Sort u}, α → α → Prop. The inductive keyword indicates that a new type, or in this case a way of creating new types, a family of types, is being defined. The funny Pi symbol can be read as saying “for any”, so Π {α : Sort u} can be read as saying, “for any Sort, α, whether Sort 0 (Prop), Sort 1 (Type), etc.” The whole first line then says that eq is a kind of function that takes what we can refer to simply as some type, α, and two values of this type, and that returns a proposition, which is itself a type. The curly braces indicate that the type is inferred from the other arguments. For example, the term eq 1 2 can now be seen as being (reducing to) a proposition, written simply as eq 1 2 or as 1 = 2.

Now we wish to enforce an interpretation of such a proposition as asserting that the two values are equal. So we want there to be a proof of such a proposition if and only if the two value arguments are equal, at least when reduced. To this end, the definition of eq provides a means, a constructor, for building proofs of such propositions. It is called eq.refl. It takes one argument, a, of type α, where α is inferred from a. It then returns a value of type a = a, that is, a proof of the proposition, eq a a. This constructor is the one and only introduction inference rule for equality. Because there are no other constructors for values of this eq type, no two terms can be proved equal unless they are in fact equal, at least when reduced. In this way, we use the definitions of inference rules to enforce our desired interpretations of given forms of propositions, here propositions that assert equalities between terms.
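The following is a minimal sketch, in Lean 3 syntax, of how one might poke at the definition just described. The comments describe what we expect Lean to report, up to the naming of universe variables; the last, commented-out line is included only to illustrate what the type checker refuses.

```lean
-- Inspect the type family and its single constructor.
-- The @ makes the implicit (curly-brace) arguments visible.
#check @eq        -- roughly: Π {α : Sort u}, α → α → Prop
#check @eq.refl   -- roughly: ∀ {α : Sort u} (a : α), a = a

-- eq.refl builds a proof that a term equals itself.
example : 1 = 1 := eq.refl 1

-- It also proves equalities whose two sides reduce to the same value.
example : 1 + 1 = 2 := eq.refl 2

-- No application of eq.refl has type 1 = 2, so this is rejected:
-- example : 1 = 2 := eq.refl 1
```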

3.2.8. Natural Language¶

Natural language proofs involving easy equalities are usually written very concisely. A proof of t = t would usually be written as something like this: “It’s trivially the case that t = t.”

In fact, you can write a proof like this in Lean using a proof tactic. A tactic in Lean is a program that looks at what information you have that can be used (called a context) and what is to be proved (called a goal), and, based on its programming, tries to help you toward having a proof. Tactics thus automate certain aspects of proof construction.

axioms (T : Type) (a : T)

example : a = a := by trivial
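As a hedged aside, here are a few other ways one might discharge the same trivial goal in Lean 3. To keep the sketch self-contained, T and a are taken as arguments of each example rather than declared as axioms; that choice is ours, not the text's.

```lean
-- Tactic style: ask the reflexivity tactic to close the goal.
example (T : Type) (a : T) : a = a := by reflexivity

-- Term style: rfl is shorthand for eq.refl with its argument inferred.
example (T : Type) (a : T) : a = a := rfl

-- Term style, fully explicit: apply the introduction rule directly.
example (T : Type) (a : T) : a = a := eq.refl a
```

All three produce a proof of a = a; they differ only in how much of the work is left to Lean.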

3.2.9. Exercises¶

- First, write a textual inference rule, let’s call it eq_snart. It says that if T is any type; if a, b, and c are values of this type T; and if you are given proofs of a = b and c = b (notice this is not b = c), then you can derive a proof of a = c.

- Now “prove” that this rule is valid by implementing it as a function that, when given any argument values of the specified types, returns a proof of the specified type (of the conclusion).

Hint: Use eq.trans to construct the proof of a = c. Its first argument will be ab, the proof that a = b. Its second argument has to be a proof of b = c for it to work to derive a proof of a = c; but all we’ve got is a proof of c = b (in the reverse order). How can we pass a second argument of type b = c to eq.trans, so that it can do its job, when what we have at hand is a proof of c = b? Now a major hint: we already have a way to construct a proof of b = c from a proof of c = b. Just use it.

Ignore the error message in the following incomplete code. The problem is simply that the definition is incomplete, due to the underscore placeholder. Replace the underscore with your answer. Leave parentheses around your expression so that it gets evaluated as its own term.

def eq_snart { T : Type} { a b c: T } (ab: a = b) (cb: c = b) : a = c := eq.trans ab (_)

- Use Lean to implement a new rule that, from a proof of c = b and a proof of b = a, derives a proof of a = c. Call the proof eq_snart’.

- Use eq_snart rather than eq.trans directly to prove a = c, given proofs of a = b and c = b. You’ll have to insert your definition of eq_snart into the following code if it’s not already present, and then replace the _ _ with the appropriate terms:

axiom T : Type

axioms (a b c : T) (ab : a = b) (cb : c = b)

#check cb

lemma aeqc : a = c := eq_snart _ _

3.3. Forall¶

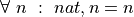

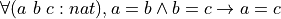

We often want to assert that something is true for every value of some type. To do this, we use a universally quantified proposition. As a simple example, we might state, in English, that every natural number is greater than or equal to zero. To write such a proposition formally in predicate logic, we use the universal quantifier symbol, ∀. Here’s our natural language statement formalized, and checked for syntactic correctness, in Lean.

#check ∀ n : ℕ, n ≥ 0

3.3.1. Formula¶

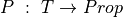

More generally, if T is any type and P is a predicate on values of type, T, then ∀ t : T, P t is a proposition. It can be read as “Every value t, of type, T, satisfies P,” or “Every t has the property P.” A proposition of this form is said to be universally quantified.

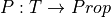

We can express these statements precisely in Lean. In the following code, the first line can be read as saying, “Assume T is any type and that P is a predicate over values of that type.” The proposition in the second line is then read as saying that every value, t of type T has property P. Lean confirms that we have given a well formed proposition.

axioms (T : Type) (P : T → Prop)

#check ∀ (t : T), P t

EXERCISE: Write an analogous piece of Lean code in which you first assume that Frog is a type and that isGreen is a predicate that asserts that any given frog is green. Then write a universally quantified proposition asserting that every frog is green.

-- Write your logic here

3.3.1.1. The Two Parts of a Universally Quantified Proposition¶

Such a universally quantified proposition has two parts. Before the comma, the ∀ symbol binds a name to an arbitrary value of a specified kind. After the comma, a predicate asserts that some proposition about that arbitrarily selected value is true. The overall effect is to assert that the proposition is true no matter which value is selected, and thus that it is true for every value of the specified kind.

In our example above asserting that every natural number is greater than or equal to zero, the name is n, and it binds to an arbitrary value of type nat. While the concept of a binding of a name to an arbitrary value may sound mysterious, it’s really not. When we specify a function, the argument name can be thought of as being bound to an arbitrary value of the specified type. When we write the body of the function, we assume that the name is bound to some value of the specified type. It is only when we apply the function to a particular value that we bind the name to a specific value: the value to which the function is being applied. In other words, we write code all the time assuming that argument names are bound to arbitrary values of specified types.
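To make the analogy with functions concrete, here is a small sketch in Lean 3. The function name inc is our own, chosen purely for illustration.

```lean
-- Inside the body, n stands for an arbitrary natural number.
def inc (n : ℕ) : ℕ := n + 1

#check inc     -- inc : ℕ → ℕ

-- Only at application time is n bound to a specific value.
#eval inc 3    -- 4
#eval inc 10   -- 11
```

The body, n + 1, is written once, under the assumption that n is some natural number; it is then valid for every argument to which inc is later applied.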

After the comma in a universally quantified proposition comes a predicate or a proposition. You can think of this as being analogous to the body of a function. We are not required to use the argument in the body, but we typically do. In practice, what appears after the comma is thus almost always a predicate in which the name bound by the ∀ appears as an argument. Such a predicate thus asserts that something is true about that arbitrary value of n, and therefore it is true for every such n.

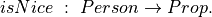

EXERCISE: Suppose we have a type, Person, and a predicate, isNice : Person → Prop. Write a universally quantified proposition asserting that everyone is nice. In Lean you can write \forall (followed by a space) to get the ∀ character.

axiom Person : Type

axiom isNice : Person → Prop

-- fill in the _ hole with your answer

#check _

EXERCISE: Write a similar block of code in which you first assume (using axiom) that isEven is a predicate on the natural numbers (taking a natural number as an argument); then write a universally quantified proposition that asserts that every natural number is even.

-- your assumption here

-- now fill in the hole

#check _

EXERCISE: In Lean, write a proposition that asserts that every natural number is equal to itself.

-- Fill in the hole with your answer

#check _

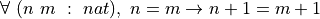

3.3.1.2. Nested Quantifiers and Accumulating Context¶

The second part of a universally quantified formula can itself be a universally quantified formula. In such cases, bindings accumulate as one gets more deeply nested. Consider the following example.

def allNatsAreEqual :=  -- line 1

  ∀ (n : nat),  -- line 2

    ∀ (m : ℕ),  -- line 3

      n = m  -- line 4

Here we assert that every natural number, n, is equal to every other natural number, m. This proposition isn’t true, of course, but it’s still a proposition. The point of the example is to see how quantified expressions can be nested and to understand how bindings accumulate. We break the overall proposition across several lines and use indentation to indicate that the second “forall” expression is to be understood in the context of the binding established by the first “forall” above it. On line 2, we bind n to any natural number. Then, in a context in which that binding is valid, on line 3, we also bind m to any natural number. We now have a context in which both n and m are bound to arbitrary values of type nat. We cannot assume that m and n are bound to the same number. Finally, on line 4, we write n = m. We can write this without a syntax error because in the context in which we write it, both n and m are defined: as being bound to arbitrary values of type nat. Such bindings expire at the ends of the expressions in which they appear.
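The following sketch, in Lean 3, illustrates the same points about nesting and scope. The commented-out line is there only to show what goes wrong when a name is used outside the expression that binds it.

```lean
-- The nested form, written on one line.
#check ∀ (n : ℕ), ∀ (m : ℕ), n = m

-- Lean lets us abbreviate nested quantifiers over the same type.
#check ∀ n m : ℕ, n = m

-- The abbreviation and the nested form are the very same proposition.
example : (∀ n m : ℕ, n = m) = (∀ (n : ℕ), ∀ (m : ℕ), n = m) := rfl

-- Here m is not bound by any enclosing quantifier, so Lean rejects it:
-- #check ∀ (n : ℕ), n = m
```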

3.3.2. Interpretation¶

We interpret such a universally quantified proposition as asserting that some proposition or predicate, P, is true no matter what value of type, T, is bound to t. The proposition that every natural number, n, is either equal to zero or greater than zero, is an example. It is true in general (for every natural number, n) because it is true no matter what specific value we choose to bind to n.
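As a minimal sketch, the example proposition can be written in Lean 3 as follows. It uses the disjunction symbol, ∨ (“or”), which is an assumption on our part about notation not yet introduced at this point in the notes. The two lines after it are specific instances, obtained by choosing particular values for n.

```lean
-- The general proposition: true no matter which n is chosen.
#check ∀ n : ℕ, n = 0 ∨ n > 0

-- Two of its instances, for n = 0 and n = 5.
#check (0 : ℕ) = 0 ∨ (0 : ℕ) > 0
#check (5 : ℕ) = 0 ∨ (5 : ℕ) > 0
```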

3.3.3. Proofs¶

3.3.3.1. Introduction Rule¶

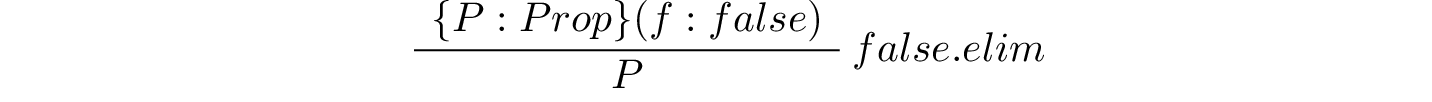

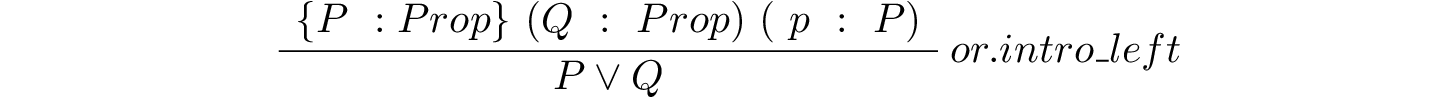

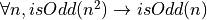

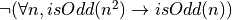

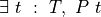

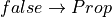

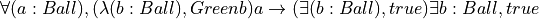

The inference rules of natural deduction for constructing and for using proofs of universally quantified propositions are defined in a way that enforces the intended meaning of universal quantification. For universal generalizations (universally quantified propositions) there is one introduction rule, a rule for constructing proofs of propositions of the form, ∀ t : T, P t.

Informally stated, to construct a proof of ∀ t : T, P t, one first assumes that t is some specific but otherwise unspecified value, of type T. In this context, if one then proves that the proposition, P t, is true, then one has shown that P is satisfied for any value, t, of type T. That finishes the proof.

Here’s an example of this proof strategy in Lean.

lemma neqn : ∀ n : nat, n = n :=  -- line 1

begin  -- line 2

  assume n : nat,  -- line 3

  exact (eq.refl n)  -- line 4

end  -- line 5

The keyword lemma on line 1 is just like def: it is a promise to bind a name, here neqn, to a value, of a particular type. Now remember that propositions are types. The type of proof to be constructed is thus ∀ n : nat, n = n. The goal at the beginning of the proof script is to construct such a value, a proof, so that we can bind the name, neqn, to it. We construct this value incrementally using a tactic script, which starts and ends with begin and end. The first line of the script assumes that n has a specific, but otherwise unspecified value of type nat. In this context, what remains to be proved is n = n. This proposition is now of a form where we can (and do) use eq.refl to generate a proof of n = n. With nothing left to prove, we are done. We have a proof of ∀ n : nat, n = n. Lean checks and accepts it as a valid value of the specified type, i.e., as a proof of the given proposition.

Be sure to step through the script with the Lean Messages window open. Notice how the assume tactic transforms the quantifier in the goal into an assumption in the context. What’s left behind is a remaining subgoal, the hole in the proof that remains to be filled: it calls for a proof that that particular n is equal to itself. The subgoal is an equality, and so is proved by a different strategy (using a different inference rule): we give an exact proof of n = n, namely, eq.refl n.

3.3.3.2. Elimination Rule¶

An elimination rule tells you what you can do with a proof that you already have. What you can do with a proof of a universally quantified proposition is to apply it to a particular instance to obtain a proof that that instance has the property that all such instances have been proved to have. For example, if you have a proof that all frogs are green, and you know that Kermit is a frog, then you can apply the general proof, as if it were a function, to the specific value, Kermit, to obtain a proof that Kermit is green. This is how the elimination rule for the universal quantifier works.

axiom Frog : Type

axiom Kermit : Frog

axiom isGreen : Frog → Prop

axiom allFrogsAreGreen : ∀ f : Frog, isGreen f

lemma KermitIsGreen : isGreen Kermit := allFrogsAreGreen Kermit

To construct a proof of a universally quantified proposition, ∀ t : T, P t, is, in effect, to have constructed a function: one that if given any value, t, of type, T, returns a proof of P t. To use a proof of a universally quantified proposition, one applies it to a specific value, t, to obtain a proof that P is true for that specific t: a proof of the specialized proposition, (P t).

For example, we can apply our proof, neqn, of ∀ n : nat, n = n, to the particular value, 3, to obtain a proof of 3 = 3. See the #check command in the following logic. The type of neqn 3 is 3 = 3, which indicates that neqn 3 is a proof of 3 = 3. Applying neqn to 4 would produce a proof of the proposition, 4 = 4. In summary, we can apply a proof of a universal generalization to a specific instance to obtain a proof for that specific instance.

lemma neqn : ∀ n : nat, n = n :=

begin

assume n : nat,

exact (eq.refl n)

end

#check neqn 3

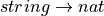

We can now make this idea precise in a completely general way. See the following code. We first assume that T is any type and P is any predicate taking an argument of type, T. We check to confirm that ∀ t : T, P t is a proposition. Next we assume that we have a proof, all_t_P, of ∀ t : T, P t, and that we have a value, t, of type T. Finally, we show that applying the proof, all_t_P, to the value, t, yields a proof that P is true for that t, i.e., in this case, a proof of the proposition, P t. The reader is advised to compare and contrast this code with the preceding example of Kermit the Frog.

axioms (T : Type) (P: T → Prop)

#check ∀ t : T, P t

axiom all_t_P : ∀ t : T, P t

axiom t : T

#check all_t_P t

#reduce all_t_P t
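The same idea can also be sketched without any axioms, by packaging the elimination rule as an ordinary function. The name forall_elim below is hypothetical, introduced only for this illustration; it is not a Lean library function.

```lean
-- Given a proof h that every t has property P, and a particular t,
-- return a proof that this t has property P, simply by applying h to t.
def forall_elim {T : Type} {P : T → Prop} (h : ∀ t : T, P t) (t : T) : P t :=
  h t

-- The Kermit argument again, with everything passed in as arguments.
example (Frog : Type) (isGreen : Frog → Prop)
        (all : ∀ f : Frog, isGreen f) (kermit : Frog) :
  isGreen kermit :=
forall_elim all kermit
```

The body of forall_elim is nothing but function application, which is the whole content of the elimination rule.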

EXERCISE: Write Lean code to formalize the following argument. Suppose we have things that are balls. Further, suppose that being yellow is a property of balls. Moreover, assume that all balls are yellow, and that B is a ball. Prove that B is yellow.

3.3.3.3. Proofs of Universal Generalizations are Functions¶

The take-away message of this section is that proofs of universal generalizations (of universally quantified propositions) are functions. They are functions from values in the domain of quantification, to values that are proofs of given propositions: usually proofs of propositions about the values bound by the quantifier.

For example, a proof of the proposition, ∀ n : nat, n = n, can be used as a function. It can be applied to a value, n, of type nat, and in this case it promises to return a proof of the proposition, n = n, for whatever particular value of n it was applied to.

Moreover, to construct a proof of such a proposition, we have to show that if we are given an arbitrary value of the quantified type, which is to say an argument, then we can construct and return a value of the promised return type: a proof of the proposition that comes after the comma. Remember, propositions are types and proofs are values. So we have to show that given any value of the argument type, we can construct and return a value of the resulting logical type, a proof.

We do this by, in effect, constructing a lambda abstraction. That we usually use a proof script, rather than ordinary code, is secondary. Here, for example, is another way to provide a proof of ∀ n : ℕ, n = n, as a lambda abstraction term.

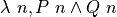

example : ∀ n : ℕ, n = n :=

λ k, eq.refl k
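For comparison, here is a hedged sketch of a few other ways to write what is essentially the same proof in Lean 3. They differ in surface syntax, but each one produces a function from natural numbers to proofs.

```lean
-- Term mode, using assume, which is sugar for λ.
example : ∀ n : ℕ, n = n := assume n, eq.refl n

-- Tactic mode: intro builds the lambda, reflexivity fills in its body.
example : ∀ n : ℕ, n = n :=
begin
  intro n,
  reflexivity
end

-- The introduction rule for equality, specialized to ℕ, is already
-- a function of exactly the required type.
example : ∀ n : ℕ, n = n := @eq.refl ℕ
```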

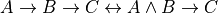

As should now be increasingly clear, the connection between proofs of universal generalizations, on one hand, and functions, on the other, is so close that they are … wait for it … the same thing. Indeed, the Lean function type, S → T, is really a shorthand for ∀ (s : S), T! A proof, or equivalently, a value, of this type is a function. To prove such a proposition, one must produce a function. To use such a proof, one applies it to a value to obtain a specialized proof for that specific value.

The following example in Lean further confirms what we have just said. If we assume that S and T are arbitrary types, and that pf_all is a proof of ∀ (s : S), T, and if we then check the type of this proof, Lean reports it to be S → T. The proof of ∀ (s : S), T is a function! If we then assume that x is some value of type, S, and if we apply pf_all to x, Lean tells us that, as predicted, we get a value of type T.

axioms (S T : Type)

axiom pf_all : ∀ (s : S), T

#check pf_all

axiom x : S

#check pf_all x

One sees here, perhaps for the first time in this course, an incredibly close connection between logic and computations. In constructive logic, we have two basic mantras. First, propositions are types. Second, proofs are programs! Moreover, the type checker ensures that all such programs are of the right type: that proofs that are accepted are always valid proofs.

As we’ll see, we need to be a bit inclusive when we say that proofs are programs. Some proofs are just data objects. Not every proof is a function. Proofs of “forall” propositions are functions, as are proofs of implications, as suggested strongly by the notation, P → Q.
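A minimal sketch of this last point in Lean 3: an implication is a universally quantified proposition whose conclusion does not mention the bound name, and a proof of an implication can be applied like any other function. The names P, Q, pq and p are placeholders of our own.

```lean
-- The arrow is shorthand for a ∀ whose body ignores the bound name.
example (P Q : Prop) : (∀ (p : P), Q) = (P → Q) := rfl

-- Using a proof of an implication: apply it to a proof of the premise.
example (P Q : Prop) (pq : P → Q) (p : P) : Q := pq p

-- Constructing a proof of an implication: write a function.
example (P : Prop) : P → P := λ p, p
```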

In any case, proofs in constructive logic are terms (values) that we can compute with. This fact is what makes it possible to automate logical reasoning in systems such as our Lean proof assistant, in Coq, in Agda, and in other constructive logic proof assistants. We write code that can deal with propositions, predicates, proofs, just as easily as, and indeed mixed in with, code that deals with computational values of types such as bool, string, nat, and so forth.

3.3.4. Natural Language¶

If you were given the task of proving a proposition of the form, ∀ t : T, P t, in another class, you would probably not produce a formal proof in Lean. Instead, you would write an informal proof in mathematical English. You might write something like this. “To prove ∀ t : T, P t, we start by assuming that t is some specific but otherwise unspecified value of type T. We will show that P is true for this value. That will effectively prove that P is true for any possible value of t.”

On the other hand, if one already has a proof of ∀ t : T, P t, and if one has a particular value, s, of type T, then it might be useful to derive a proof of P s. One might write, “We know that P t is true in general, so it must be the case that it is true in the specific case where the value of t is s. Thus, from our proof of ∀ t : T, P t, we deduce a proof of P s, in particular.”

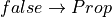

3.4. False¶

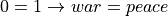

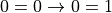

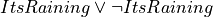

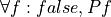

The simplest of all propositions is false. It is even simpler than true, because whereas true has one constant proof term, true.intro, making it unconditionally true, false has no proofs at all, making it unconditionally false.

3.4.1. Formula¶

In some writing, false is denoted as ⊥, pronounced bottom. In Lean it is just written as false. Check it in Lean using the #check command. False is a proposition. Propositions are types. So false is also a type. And as a type, it has no values. There are no proofs of false. It is an uninhabited type.

#check false

3.4.2. Interpretation¶

We interpret false as a proposition that can never be proved true. In the constructive logic of Lean, that means that there must be no proofs of it whatsoever.

3.4.3. Introduction Rule¶

To enforce this interpretation, the type, false, has no introduction rule. Without an introduction rule, no proof can be constructed. That is what makes false false.
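One way to see this for yourself, sketched in Lean 3, is to print the two definitions side by side. The comments say what we expect to find; the exact layout of the output may vary with the Lean version.

```lean
-- false is an inductive proposition with no constructors at all.
#print false

-- true, by contrast, has exactly one constructor, true.intro.
#print true

-- So a proof of true is always available ...
example : true := true.intro

-- ... whereas there is no constructor to call to build a proof of false.
```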

3.4.4. Elimination Rule¶

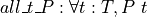

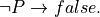

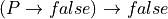

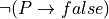

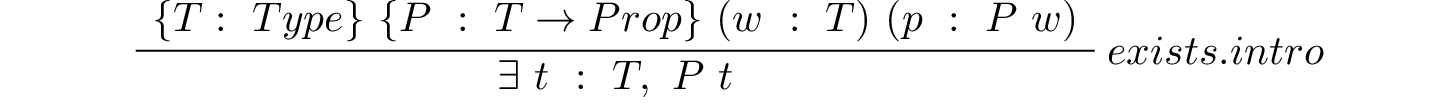

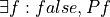

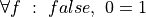

On the other hand, in a context in which one is assumed to have a proof of false, that proof is very handy because from it, a proof of any other proposition whatsoever can be derived. In other words, false being true implies that anything at all is true as well. This idea is formalized in the inference rule called false elimination. Given an assumed proof of false and any other proposition, P, one can apply the elimination rule for false to obtain a proof of P. In Lean this rule is called false.elim. Here is the rule in inference rule notation.

You will notice that in writing the inference rule, we enclosed the declaration of the argument, P, in curly braces, rather than in parentheses. That signals that when we apply this rule in Lean, we will not have to give a value for P explicitly. Rather, the value of P will be inferred from the type of proof to be produced (below the line), which is of course just P.
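As a hedged sketch, the shape of the rule can be read off from Lean 3 itself: above the line are an implicit proposition and a proof of false, and below the line is a proof of that proposition. Note that Lean's own declaration is slightly more general than the rule as stated here, since its implicit argument may be any Sort, not only a Prop.

```lean
-- The @ makes the implicit argument visible.
#check @false.elim   -- roughly: Π {C : Sort u}, false → C

-- With the proposition left implicit, Lean infers it from the expected type.
example (P : Prop) (f : false) : P := false.elim f

-- With the proposition supplied explicitly, using @.
example (P : Prop) (f : false) : P := @false.elim P f
```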

Here is a short piece of code in Lean showing the application of this rule. Lean supports type inference: because false.elim declares its proposition argument in curly braces, the body below applies it to f alone, and Lean infers P from the type of the proof to be returned. Our own function, proveAnything, by contrast declares P in parentheses, so P must be given explicitly when the function is applied.

def proveAnything (P : Prop) (f : false) : P :=  -- line 1

  (false.elim f)  -- line 2

axiom bad : false  -- line 3

#check proveAnything (0 = 1) bad  -- line 4

What this code shows is that if the proveAnything function were given any proposition, P, along with a proof of false, then it would return a proof of P. Given a proof of false, this function can be used to obtain a proof of any proposition. Lines 1-2 define this function. It takes any proposition, P, and a proof of false, f, as arguments, and promises to return a proof of P, no matter how crazy P might be. Line 3 uses the axiom statement to assume that there is a proof of false, here named bad. The final line shows that applying the function, proveAnything, to the proposition, (0 = 1), and to this assumed proof of false returns a proof of (0 = 1). If one has a proof of false, then one can prove anything at all.
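For contrast, here is a sketch of a variant in which the proposition is declared in curly braces, so that Lean infers it. The name proveAnything' is our own, and the proof of false is taken as an argument of each example rather than assumed as an axiom.

```lean
-- P is implicit: it is inferred from the type of proof that is expected.
def proveAnything' {P : Prop} (f : false) : P :=
  false.elim f

-- Lean infers P to be 0 = 1 from the type of the example.
example (f : false) : 0 = 1 := proveAnything' f

-- The very same function proves any other proposition as well.
example (f : false) : ∀ n : ℕ, n = n + 1 := proveAnything' f
```

Taking the proof of false as an argument, rather than as an axiom, keeps the assumption local: it holds only inside the example in which it is made.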

This conclusion, 0 = 1, is absurd, of course. But the assumption that there is a proof of false was absurd to begin with. It represents a situation that is impossible. If one finds oneself in such a situation in the course of logical reasoning, then the situation can basically be ignored, as it could not ever actually arise.

Now, you might ask, how can we write a function that takes an argument of a type, false, given that this type has no values? The answer is that such a function definition is fine; it will just never be possible to apply it, as there is no proof of false to which it could ever be applied. In writing the body of the function, we can ignore the fact that the function can never be applied, and write the code as if arguments of the right types were provided. Within the body of a function, in other words, we assume that arguments of the right types have been given. From now on, think of declarations of the arguments to a function as axioms: facts that can be assumed within the body of the function. This is why we can apply false.elim to an assumed proof of false within the body of our function, even though no such value will ever really exist. Arguments are axioms when it comes to writing function bodies.

You can thus read our function as a piece of logic: if you can provide a proof of false and any proposition, P, this function will give you back a proof of P, no matter what P is. What the existence of this function shows is that false → P holds for any proposition, P. The function works by applying the false.elim rule. This rule plays a vital role in reasoning about negations: propositions that assert that some state of affairs does not hold.
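Read as a piece of logic, the function can be restated as a single universally quantified proposition together with its proof. The following is a minimal sketch in Lean 3.

```lean
-- For every proposition P, false implies P.
-- The proof is a function of two arguments: a proposition and a proof of false.
example : ∀ (P : Prop), false → P :=
  λ P f, false.elim f
```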

To conclude, from a proof of false one can derive a proof of anything at all. Logicians have naturally given a Latin name to this principle: ex falso quodlibet. From false, anything follows. This principle is sometimes also called the principle of explosion. While this inference rule might appear to be of little use, since it relies on a proof of false, the reader will learn that it is actually very powerful and useful, for example in proofs by contradiction!
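
As a small preview of how the rule is used with contradictory assumptions, here is a minimal sketch. It assumes the standard Lean 3 reading of ¬ P as P → false, which these notes treat in detail only later.

```lean
-- From a proof of P and a proof of ¬ P we first obtain a proof of false
-- (by applying np to p), and from that proof, anything at all.
example (P Q : Prop) (p : P) (np : ¬ P) : Q :=
  false.elim (np p)
```

The proposition Q is entirely arbitrary here, which is exactly what the principle of explosion says.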

3.4.5. Natural Language¶

Because there is no proof of false, there is no way to give a natural language rendition of a proof of the proposition, false. If asked to do so, one could simply say, “As there is no proof of false, no proof can be given. The proposition is thus disproved.” On the other hand, if one is in a context in which a proof of false has been derived, and in this context one needs to produce a proof of some proposition, P, one can just say, “from our proof of false, anything follows, so the truth (and a proof) of P, in particular, follows.”

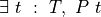

3.5. True¶

3.5.1. Formula¶

In predicate and constructive logic, true, sometimes written ⊤, and called top, is a proposition. In Lean it is just written as true. Here we use the check command in Lean to confirm that true is of type Prop. It’s a proposition.

#check true

3.5.2. Interpretation¶

The intended meaning of this proposition is that it’s unconditionally true. In constructive logic, that means that there must be a proof of true that is always available.

3.5.3. Introduction¶

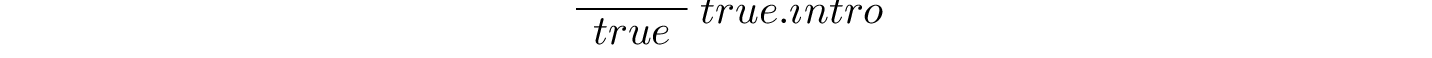

To this end, the rules of natural deduction provide one introduction (proof constructing) rule for true. It is called true.intro. It takes no arguments and so imposes no conditions. It can always be used to obtain a proof of true.

A function that takes no arguments is a constant. So true.intro can be seen as both a function, albeit one taking no arguments, and as a constant. It is a proof of true. We confirm this in Lean by checking that the type of true.intro is true. The former is a proof of the latter.

#check true.intro -- true.intro is a proof of true

Here are a few examples using this rule.

lemma t : true := true.intro -- explicit type declaration

lemma t' := true.intro -- Lean can infer the type

#check t -- it's a proof of (type) true

#reduce t -- it's true.intro specifically

On the first line we declare the name t to be bound to a value of type true, i.e., a proof of true, and we prove that such a binding can be made by providing a proof of true to the right of the :=, namely, true.intro. The second line shows that we can rely on Lean to infer the type of t, here renamed to t’ to avoid name conflicts. The third line confirms that the type of t is true, which is to say that the value of t is a proof of this proposition. The final line shows us that the actual proof value is, as expected, true.intro.

In the first two lines of the preceding example, we provided the required proofs of true by giving a proof value, true.intro, directly in the code. There is another way to provide proofs in Lean, which is often preferable. Its benefit is that it allows you to construct proof objects incrementally. This technique uses what are called proof scripts to produce proofs. In lieu of an exact proof term, we provide a script enclosed in a begin-end pair. In this case, the script has just one line. It simply offers true.intro as an exact proof of true. The name of the tactic in this case is exact. Bring this code up in your browser. Put your cursor on the begin. See how Lean starts by telling you what values you have to work with (none), and what you need a proof of (true). As you step through the proof script, the obligation to produce such a proof is satisfied.

-- prove lemma by constructing a proof interactively

lemma t'' : true :=

begin

exact true.intro

end
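
For comparison, here are a few other ways of discharging the same goal, given as a sketch in Lean 3 syntax. They use the core library's trivial, which is both a named proof of true and the name of a tactic; neither is introduced in these notes, so treat them as optional extras.

```lean
-- A term-style proof using the core library's name for a proof of true.
example : true := trivial

-- A one-line script: "by" runs a single tactic without begin-end.
example : true := by exact true.intro

-- A script that uses the trivial tactic instead of exact.
example : true :=
begin
  trivial
end
```

All three produce a proof of true, and since true has only one proof, each is in the end the same proof as true.intro.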

3.5.4. Elimination¶

Little of use can be done with a proof of true. There is no elimination rule for true.

3.5.5. Natural Language¶

Most working mathematicians and computer scientists still write natural language proofs. To give a (natural language) proof of the proposition, true, one could simply say, “The proposition, true, is trivially true. QED.” The QED stands for the Latin phrase, quod erat demonstrandum, which means, “which was to be demonstrated.” It’s a traditional way of ending a proof in a way meant to signal that a valid proof is claimed to have been given.

3.5.6. Formalization (Optional)¶

In constructive logic, propositions are formalized as special types, and proofs of propositions are values of their types. Here is how the proposition, true, is defined as a type with one value, that is, one proof, called true.intro. We give two definitions, the second of which (with a name change to avoid conflicts) differs only to show that Lean can infer the type of intro as true'. We will prefer to include explicit type declarations in such type definitions.

namespace cs2102

inductive true : Prop

| intro : true

inductive true' : Prop

| intro

#check true

#check true.intro

#check true'

#check true'.intro

end cs2102

Consider the first definition, of true. The keyword, inductive, introduces a type definition. The name of our type is true. Its type is Prop, which indicates that true is a logical type, a proposition, rather than a computational type, such as nat or bool. Following the first line, we define the one constructor for values of this type (for proofs). Each constructor definition for a type is preceded by a vertical bar. Then comes the name and type of the constructor. And that is it.

You will note that we used the name intro for the constructors in both cases, without any conflicts. That is because each type definition establishes its own namespace, having the same name as the given type. The two intro terms are thus different terms. One is referred to as true.intro; the other, as true'.intro. We thus see now exactly where true.intro comes from. It is the one and only constructor for values of type true, i.e., for proofs of this proposition. Taking no arguments, it is also a constant: the one and only proof of true. We will see constructors that take arguments later.
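
To see the role that constructors play, it can help to put a one-constructor proposition next to a proposition with no constructors at all. The following is a minimal sketch in Lean 3; the names my_true, my_false and mt are hypothetical and chosen to avoid clashing with the library's true and false.

```lean
-- One constructor: exactly one proof.
inductive my_true : Prop
| intro : my_true

-- No constructors: no proofs can ever be built.
inductive my_false : Prop

-- The constructor is the proof.
lemma mt : my_true := my_true.intro

#check my_true.intro   -- a proof of my_true

-- With no constructors there are no cases to consider, so the
-- automatically generated recursor yields a proof of any P.
example (P : Prop) (f : my_false) : P := my_false.rec P f
```

The last line mirrors false.elim from the preceding section: a type with no constructors behaves just as false does.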

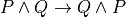

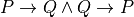

3.6. Conjunction¶

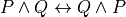

If P and Q are propositions, then so is P ∧ Q. In such a situation, if P is true and if Q is also true, then it must be the case that the proposition, P is true and Q is true, is also true. This intuition is expressed formally in logic using the logical connective, ∧. It is pronounced and. In Lean, you can create this symbol by typing \and followed by a space.

3.6.1. Formula¶

To formally express the proposition that P is true and Q is true, we write P ∧ Q. Such a proposition is called a conjunction. The following code confirms that if we assume that P and Q are propositions, as we do on the first line, then P ∧ Q is a proposition. So is and P Q. And these are in fact the same proposition, just written using two different notations.

axioms P Q : Prop

#check (P ∧ Q)

#check (and P Q)
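
The claim that the two notations denote the same proposition can itself be checked. The following sketch in Lean 3 states the equality for arbitrary propositions, taken here as arguments rather than axioms so that the example is self-contained.

```lean
-- P ∧ Q is only notation for and P Q, so the two terms are
-- identical and reflexivity (rfl) proves them equal.
example (P Q : Prop) : (P ∧ Q) = (and P Q) := rfl
```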

3.6.1.1. Infix, Prefix, and Postfix Notation¶

The symbol, ∧, appearing between two conjuncts, P and Q, is really just a shorthand notation for the function application term, and P Q. An expression in which an operator (i.e., a function name, such as ∧) appears between its operands is said to be in infix notation. If the operator in an expression appears before its operands, the expression is said to be in prefix notation. Operators can also appear after their operands, in which case the expression is said to be written in postfix notation. Expressions involving the factorial function are generally written this way. So, for example, the notation, 5!, can be understood as shorthand for the function application term, fac 5.
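
The same distinction shows up in ordinary arithmetic. The sketch below, in Lean 3, compares an infix expression with the prefix application it abbreviates, and defines a factorial function to be used in prefix form. The name fac follows the text; its definition is an assumption of this example rather than something taken from a library.

```lean
-- Infix: the operator + sits between its operands.
#check 2 + 3

-- Prefix: the function that + abbreviates, written before its arguments.
#check has_add.add 2 3

-- The two are the same term, so rfl proves them equal.
example : 2 + 3 = has_add.add 2 3 := rfl

-- A factorial function, applied in prefix form as fac 5.
-- On paper the same value is written in postfix form as 5!.
def fac : ℕ → ℕ
| 0       := 1
| (n + 1) := (n + 1) * fac n

#eval fac 5   -- 120
```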

3.6.1.2. Type Constructors¶

Viewed as a function, and is rather special. It’s a function that takes two propositions, P and Q, which are types, as arguments, and it returns the proposition P ∧ Q, the conjunction of P and Q, as a result, which is another type. The ∧ operator is another name for this function. We call such a function a type constructor, because it takes types as arguments (remember propositions are types) and returns a type as a result.

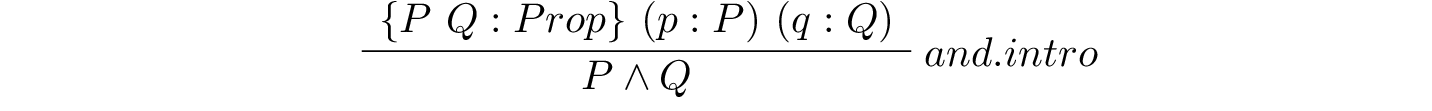

As we will see later in more depth, such a type constructor has an associated set of zero or more rules for constructing values of the constructed type. By rule, what we mean is a function for constructing values of a type built using a type constructor. We will call such functions value constructors (or, often, just constructors). The and.intro rule is just such a value constructor. It provides a means for constructing proof values for conjunctions (which are propositions and therefore types) that were themselves constructed using the and type constructor.

Be sure you see the essential difference between type constructors and value constructors. Here, and is a type constructor; and.intro is a value, or proof, constructor.
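
The difference is visible in the types that Lean reports for the two names. This is a small sketch in Lean 3; the @ sign only asks Lean to display implicit arguments explicitly.

```lean
-- The type constructor: takes two propositions, returns a proposition.
#check and          -- and : Prop → Prop → Prop

-- The value constructor: takes proofs of two propositions and
-- returns a proof of their conjunction.
#check @and.intro
```

The first builds a type from types. The second builds a value (a proof) of such a type from values of the component types.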

3.6.2. Interpretation¶

We interpret a conjunction, P ∧ Q, as asserting that both P and Q are true. In the logic of Lean, that means there must be a proof of P and there must be a proof of Q. The proof construction rule for conjunctions, i.e., the introduction rule for and, strictly enforces this interpretation.
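
A minimal sketch of this enforcement, in Lean 3 syntax: and.intro can be applied only when a proof of each conjunct is in hand. The first example reuses true.intro from the preceding section; the second assumes proofs p and q as arguments.

```lean
-- Both conjuncts have proofs, so the conjunction has one too.
example : true ∧ true := and.intro true.intro true.intro

-- In general: given a proof of P and a proof of Q, build a proof of P ∧ Q.
example (P Q : Prop) (p : P) (q : Q) : P ∧ Q := and.intro p q
```

By contrast, no closed proof of true ∧ false can be written this way, because the second argument would have to be a proof of false.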

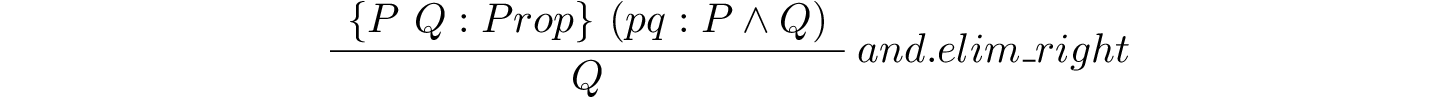

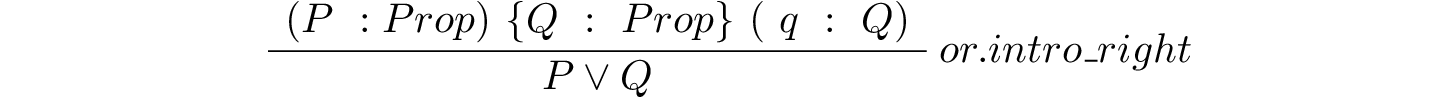

3.6.3. Introduction¶